Automated customer support with GPT-4, but it's actually good

Since the GPT-related AI hype started, I have dismissed it as a gimmick. When DALL-E 2 was announced, I tried it, made a few fun pictures, and moved on. When GPT-3 became available, I tried it, made a few completions, and moved on. When ChatGPT and even Bing AI were announced, I couldn't understand who would spend hours talking to them instead of just googling something.

Sure, I could use it to generate a short Father's Day story about Darth Vader and Luke Skywalker, one that incorporated their backstory correctly and the culture of the time.

"I'm glad we were able to spend this day together," Luke said, a smile on his face. "As am I," Darth Vader replied, placing a hand on his son's shoulder. "I am proud of the man you have become, Luke. You have grown strong in the ways of the Force, and I am honored to be your father."

But who was actually making productive use of this? I had access to the most capable AI models in the world and couldn't find any practical use for them. Even the code generation, while good, was frequently more hassle than just writing the code myself.

Recently, though, I've been working on a new project and decided to use ChatGPT to generate a few ideas for a sample pitch deck and marketing content. ChatGPT performed admirably. The one thing I learned is that it is incredible at coming up with ideas for content: stuff that you can lightly edit and slot in.

Like a junior content writer.

But it wasn't living up to the hype for me.

What if it could act as a customer support person?

GPT-4 was announced with an expanded context window (meaning it can keep longer conversations in memory while chatting) and a system prompt (which lets you specify rules for the AI to follow, separately from the conversation). I wanted to know whether I could use it for customer support for one of my clients (a self-hosted invoicing app) and how it would perform. I would never want *automated* responses, but I figured I could have it suggest reasonable responses to support tickets. Our team could spend 5 seconds reviewing a suggestion and hit "send" if it's OK. It would save a lot of time, especially for long-running tickets with lots of context. The AI could grasp in a few seconds what it would take us minutes to read through and process.

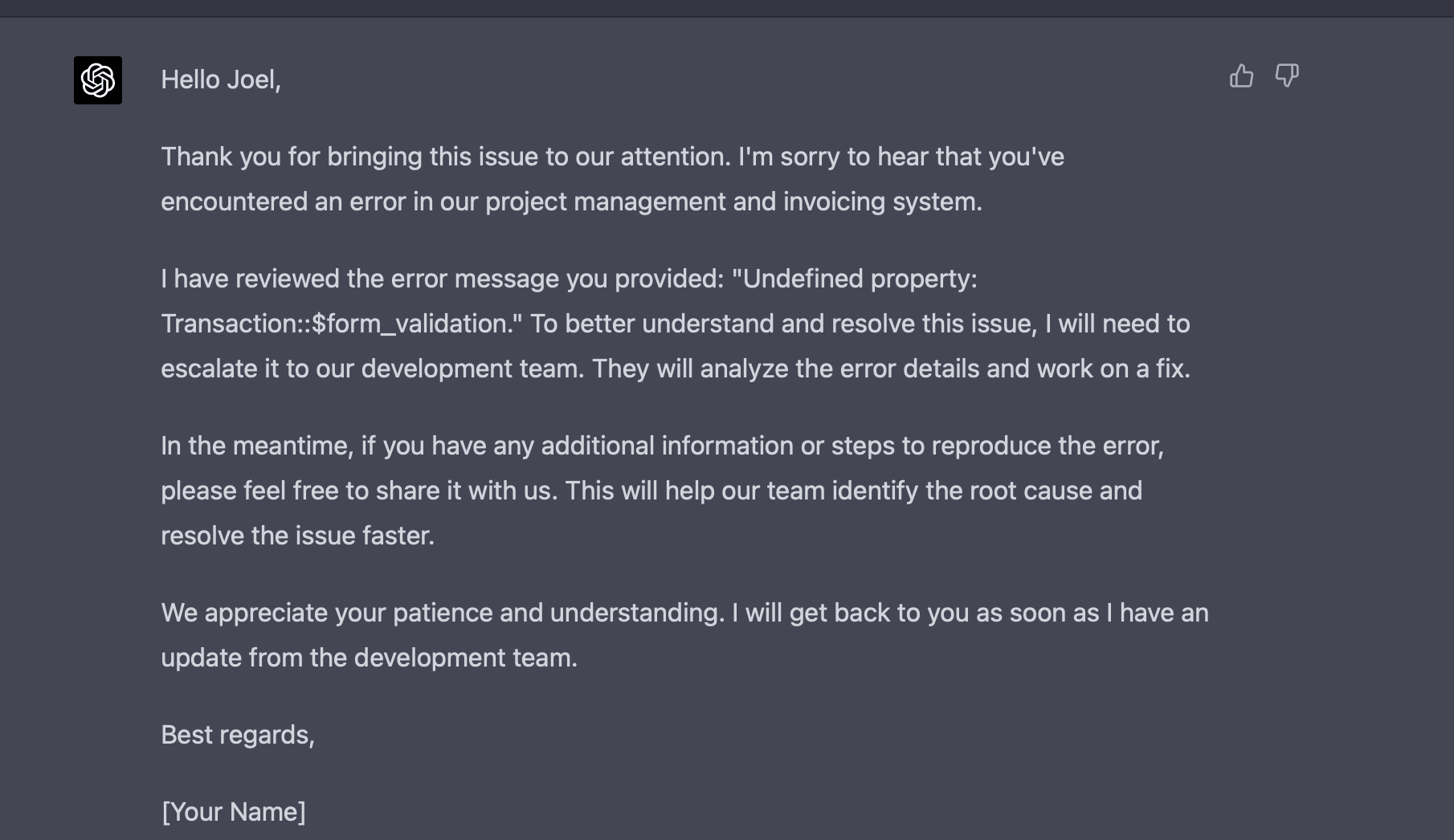

Let's set out to investigate if it's possible. I have a sample bug report for testing. I can start by giving ChatGPT (where I have access to GPT-4) a simple prompt, asking it to act as a customer support agent, and providing a few details about the system and our policies (e.g. if it's a bug, tell the customer you will escalate it to the development team; if it's a feature request, don't promise we're building it; and so on).
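Once this moves from the ChatGPT window to the API, the same instructions fit naturally into the system prompt. Here is a minimal sketch of that idea, assuming the message shape of OpenAI's chat completions endpoint. The policy wording, the `buildSupportMessages` helper, and the sample ticket are made up for illustration and are not the actual prompt.

```typescript
// Message shape used by OpenAI's chat completions endpoint.
type ChatMessage = { role: "system" | "user" | "assistant"; content: string };

// Hypothetical policy list; the real wording lives in the actual prompt.
const policies: string[] = [
  "If the ticket describes a bug, say you will escalate it to the development team.",
  "If the ticket is a feature request, do not promise that it is being built.",
];

// Rules go in the system message, the ticket itself goes in a user message.
function buildSupportMessages(productDescription: string, ticketText: string): ChatMessage[] {
  const systemPrompt = [
    "Act as a customer support agent for the following product.",
    productDescription,
    "Policies:",
    ...policies.map((p) => "- " + p),
  ].join("\n");
  return [
    { role: "system", content: systemPrompt },
    { role: "user", content: ticketText },
  ];
}

// Sample ticket text, invented for the example.
const messages = buildSupportMessages(
  "A self-hosted invoicing app.",
  "Invoices created today show yesterday's date."
);
```

Keeping the policies in a plain list makes it cheap to add a new rule whenever a generated reply gets one of them wrong.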

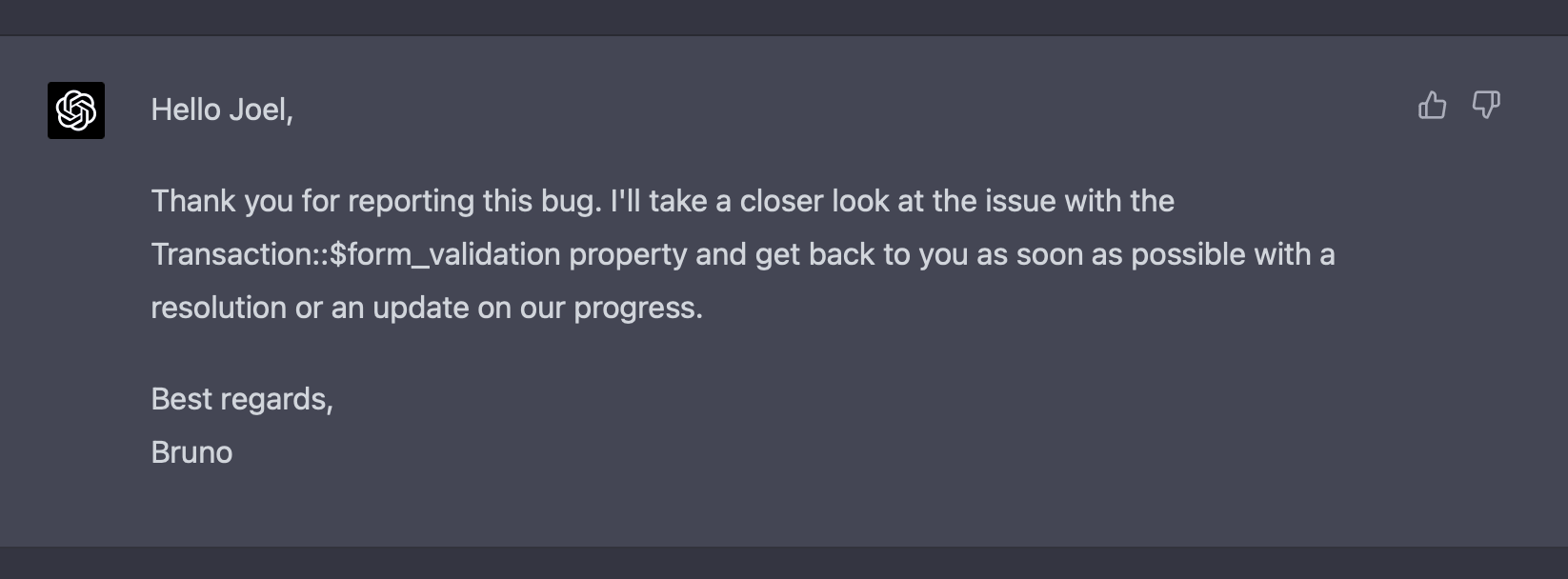

It works, but it could be better. It's very formal and breaks with how we do things: we don't have customer support staff; the whole development team is responsible for customer support issues. Doing it that way is a great way to keep developers informed of frequent customer pain points. It also leads to higher-quality customer support, since a developer usually knows what's going wrong and how features should behave.

Act as a software developer triaging tickets

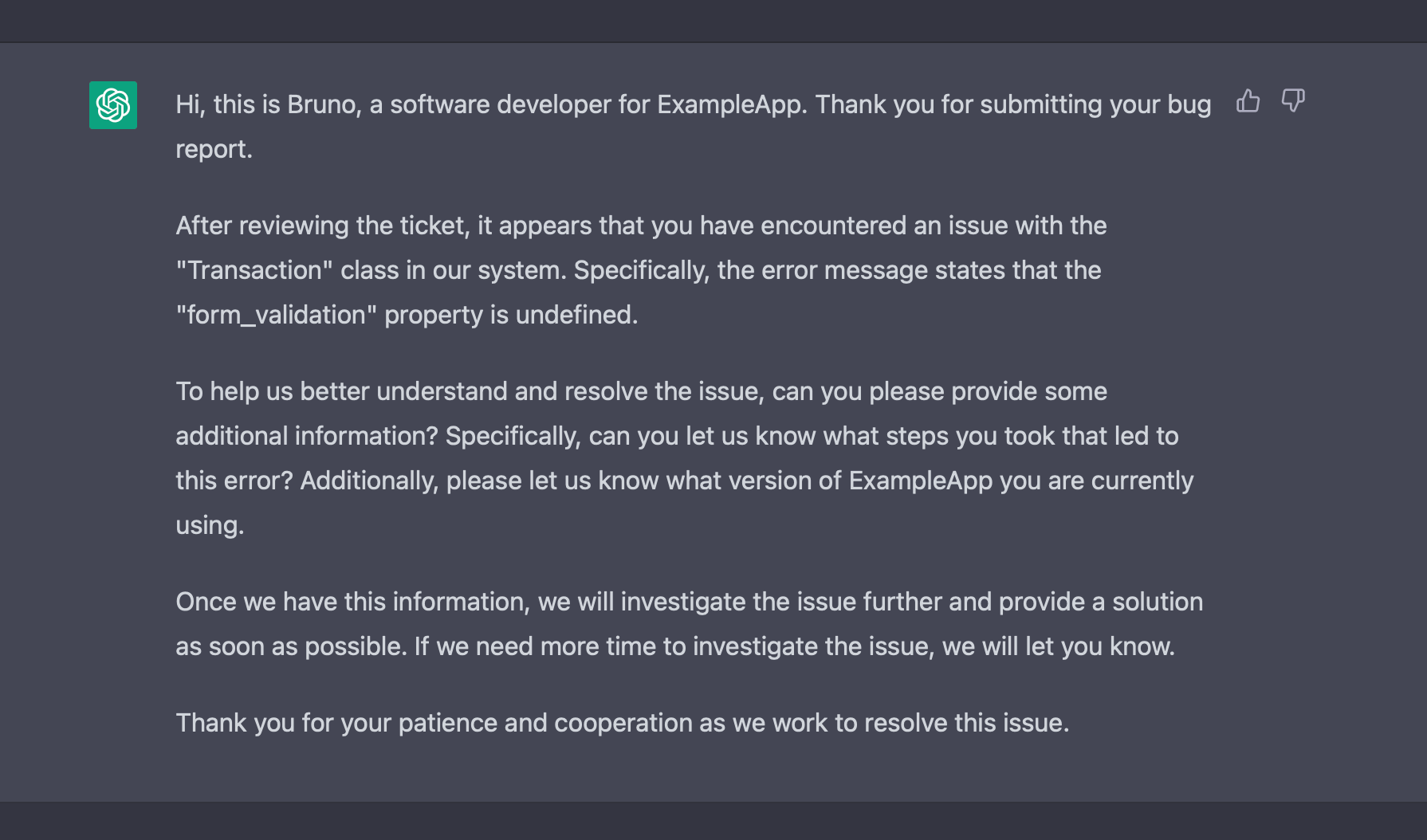

Let's ask GPT to pretend to be a developer, not a generic customer support person. Not just a developer, though: me. I want GPT to act as me, so I can use its generated replies as my own. During testing, I can alternate between GPT-3.5 and GPT-4 to ensure I don't burn through my message cap and to see how each version fares.

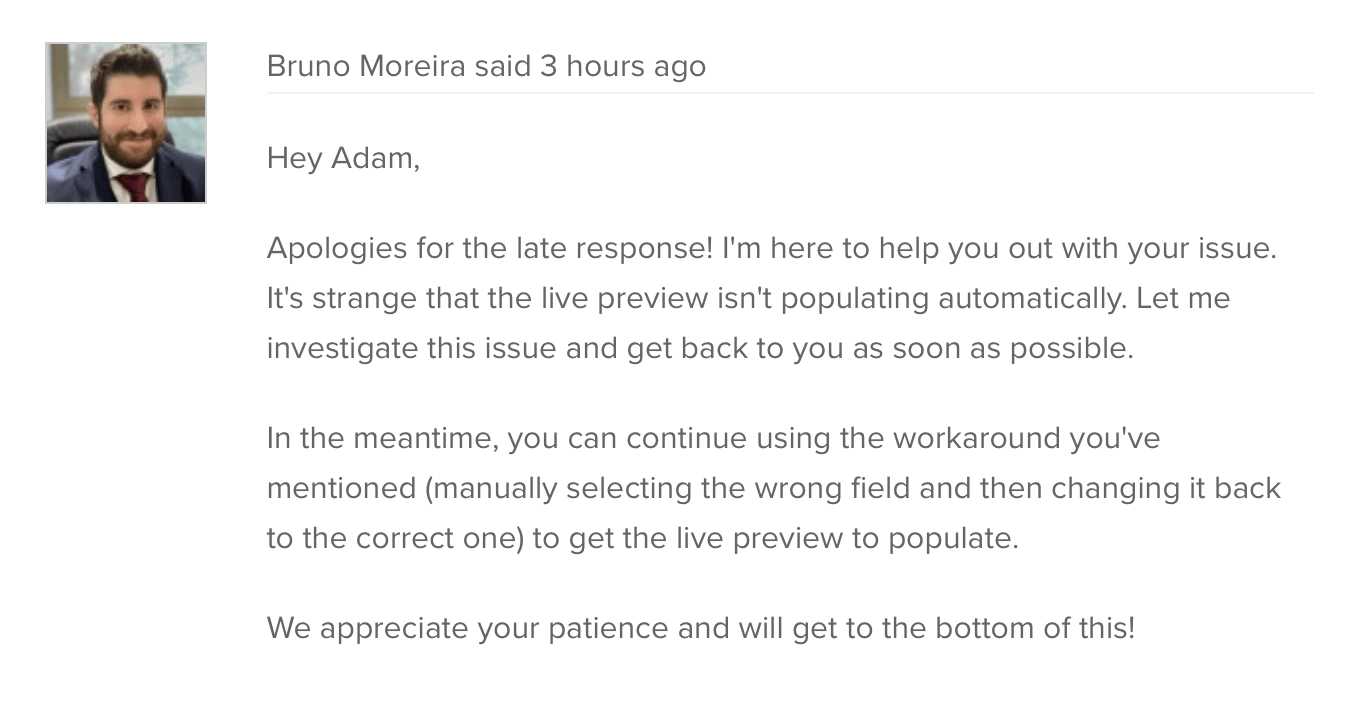

The software developer persona seems far more knowledgeable about what is going on.

But I don't like that it's introducing itself. The customer already knows it's Bruno. These conversations happen in our support ticket system; my name and picture are on the page! Let's try a few variations of the prompt until we nail it.
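To give a feel for the kind of variation involved, here is a rough sketch of a persona prompt with the "don't introduce yourself" rule spelled out. The wording and the `developerPersonaPrompt` helper are hypothetical; the prompt that actually worked is only visible in the screenshots.

```typescript
// Hypothetical persona prompt builder; the wording is illustrative only.
function developerPersonaPrompt(name: string, product: string): string {
  return [
    "You are " + name + ", a software developer on the team that builds " + product + ".",
    "You triage support tickets yourself and reply in the first person.",
    "The customer can already see your name and picture, so do not introduce yourself or sign off with your name.",
    "Keep the tone friendly and informal.",
  ].join("\n");
}

const personaPrompt = developerPersonaPrompt("Bruno", "a self-hosted invoicing app");
```

Stating the reason (the name and picture are already on the page) alongside the rule tends to make an instruction like this easier to tweak later.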

Having gotten through that, we can move back to GPT-4. The experiment is a success.

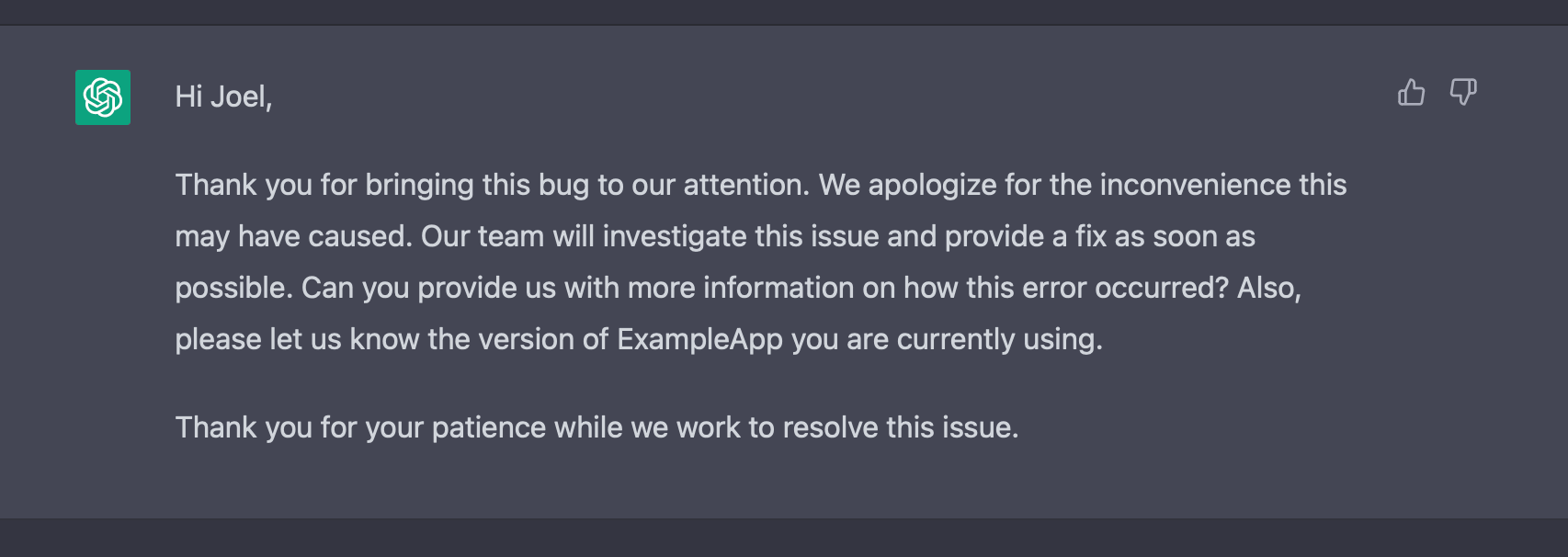

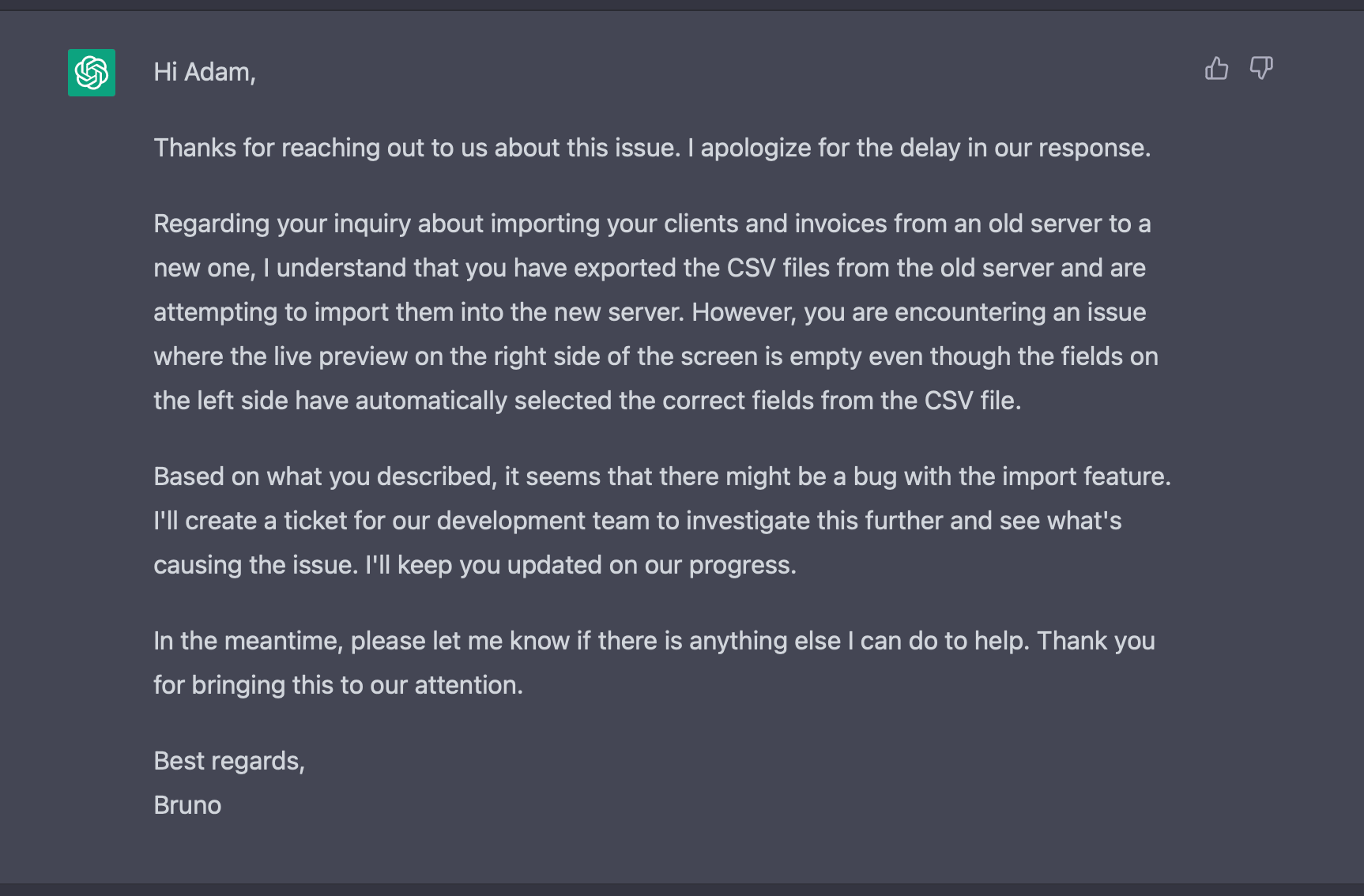

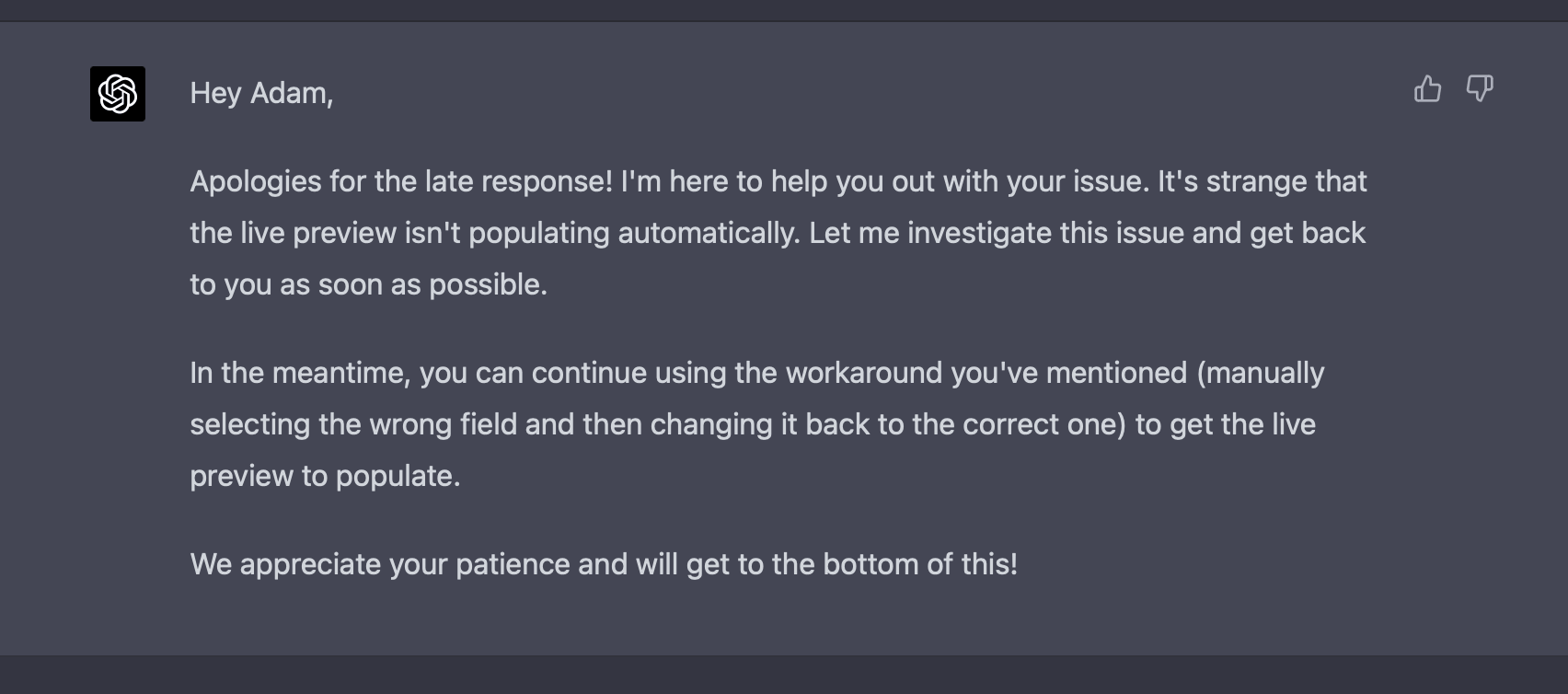

Now it's time to feed it an actual ticket with actual responses. ChatGPT handles it well, but there is a dramatic difference in quality between GPT-3.5 and GPT-4, enough to convince me that regardless of the cost of GPT-4, it has to be the model I use.

With GPT-3.5, it's decent.

With GPT-4, it's fantastic, and it even notices that the customer had found a workaround and had been using that in the meantime.

Integrating with the support system

Coincidentally, right when I was messing around with ChatGPT, I got an email letting me know I now had GPT-4 API access! With that, I can pull everything I've done into our support system and integrate GPT-4 into our response textarea.

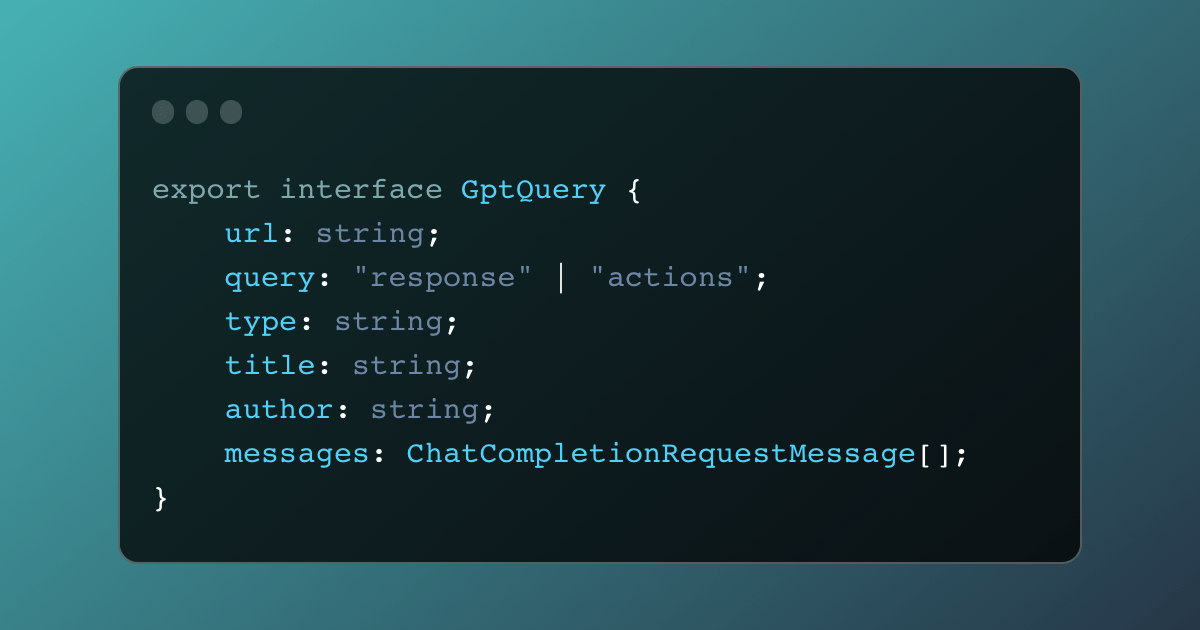

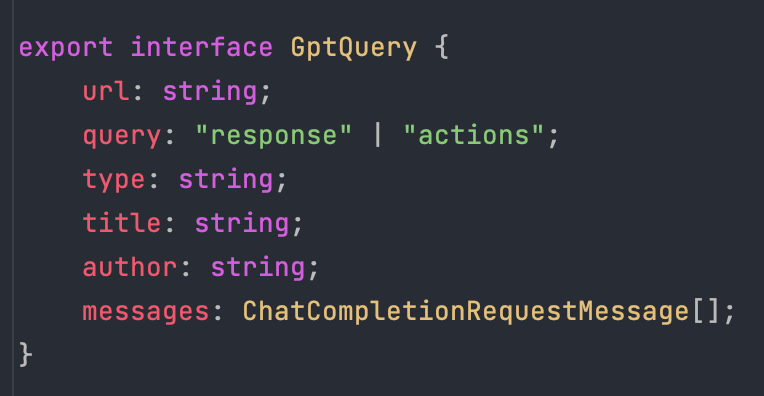

This will be a local integration; no editing of the support ticket system's code is involved. I can start by building a simple Express server that receives requests containing a ticket's details. That server will send those details to GPT-4, along with a prompt telling it what to do, and return the generated response.
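The core of such a server is small: turn the ticket into a chat completions request body. Below is a minimal sketch of just that step, leaving out the Express route and the HTTP call. The `Ticket` shape and the `buildChatRequest` name are assumptions made for the example. Mapping team messages to the `assistant` role and customer messages to the `user` role is one reasonable choice, not necessarily what the real integration does.

```typescript
// Assumed ticket shape; field names are hypothetical.
type TicketMessage = { author: string; fromTeam: boolean; text: string };
type Ticket = { title: string; author: string; type: string; url: string; messages: TicketMessage[] };

// Request body shape for OpenAI's chat completions endpoint.
type ChatMessage = { role: "system" | "user" | "assistant"; content: string };
type ChatRequest = { model: string; messages: ChatMessage[] };

function buildChatRequest(ticket: Ticket, systemPrompt: string): ChatRequest {
  // Ticket metadata is appended to the system prompt so the model sees it once.
  const header = [
    "Ticket: " + ticket.title,
    "Type: " + ticket.type,
    "Opened by: " + ticket.author,
    "URL: " + ticket.url,
  ].join("\n");
  // Team replies become assistant turns, customer messages become user turns.
  const history = ticket.messages.map((m): ChatMessage => ({
    role: m.fromTeam ? "assistant" : "user",
    content: m.text,
  }));
  return {
    model: "gpt-4",
    messages: [{ role: "system", content: systemPrompt + "\n\n" + header }, ...history],
  };
}

// Sample ticket, invented for the example.
const request = buildChatRequest(
  {
    title: "Wrong invoice date",
    author: "Alice",
    type: "bug",
    url: "https://support.example.com/tickets/1",
    messages: [
      { author: "Alice", fromTeam: false, text: "New invoices show yesterday's date." },
      { author: "Bruno", fromTeam: true, text: "Thanks, which timezone is your server in?" },
      { author: "Alice", fromTeam: false, text: "UTC+10." },
    ],
  },
  "You are a developer replying to support tickets."
);
```

The resulting object is what would be sent as JSON to `https://api.openai.com/v1/chat/completions` with the API key in an `Authorization: Bearer` header; the suggested reply comes back in `choices[0].message.content`.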

Once that's done, I can write a little bookmarklet that extracts the ticket's details (title, author, ticket type, URL, and all the messages in the ticket, both from the team and the customer) and submits them to the Express server. Easy enough.

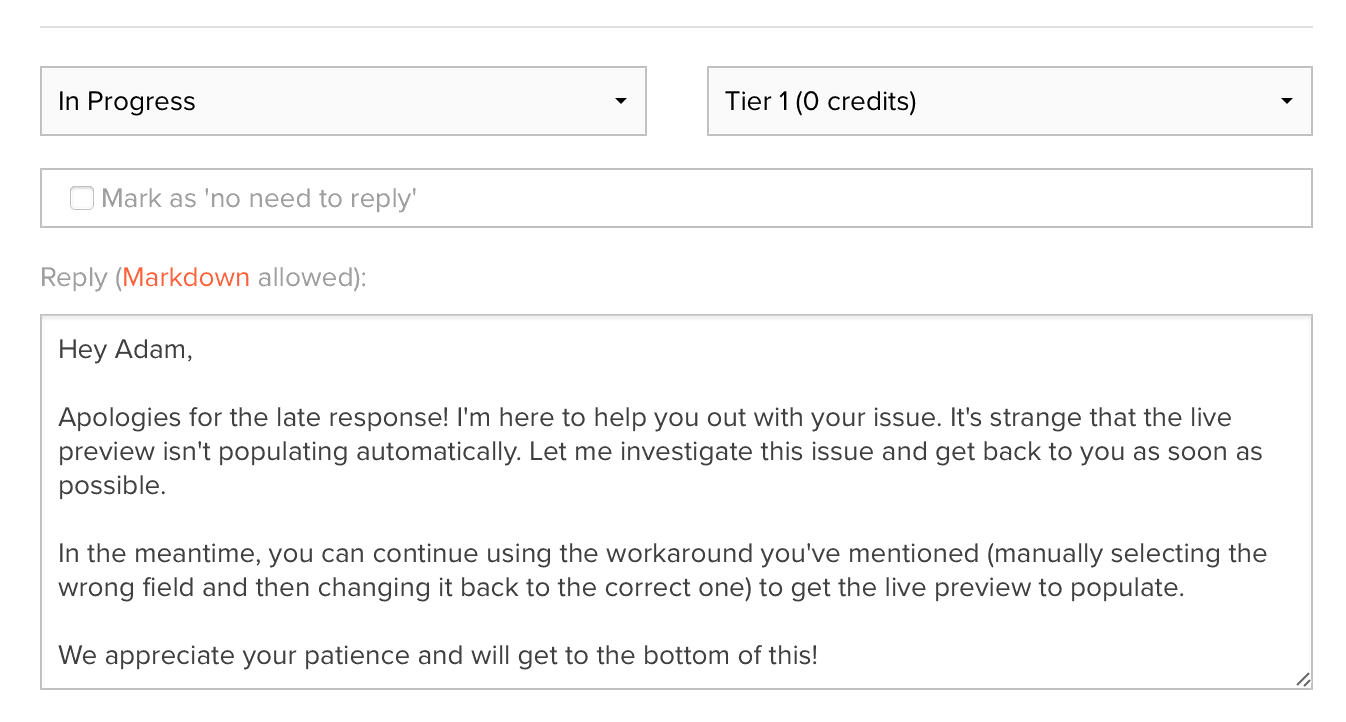

The bookmarklet sets the response textarea to "Generating… " to visually indicate what's happening. Once it receives the response, it dumps that into the textarea. I can read through the response, ensure it looks good, and submit it.
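The textarea handling can be sketched roughly like this. It is a simplified stand-in for the real bookmarklet: `TextBox` replaces the actual textarea element, `send` replaces the HTTP call to the local server, and restoring the previous text on failure is an addition of this sketch rather than something the original is known to do.

```typescript
// Minimal stand-in for the response <textarea>; only its value is needed here.
type TextBox = { value: string };

// `send` is injected so the sketch does not depend on a particular HTTP client.
async function suggestReply(
  box: TextBox,
  ticketJson: string,
  send: (body: string) => Promise<string>
): Promise<void> {
  const previous = box.value;
  box.value = "Generating… "; // visual indicator while the request is in flight
  try {
    box.value = await send(ticketJson);
  } catch (err) {
    box.value = previous; // put back whatever was there if the request fails
    throw err;
  }
}

// Usage with a fake sender that resolves immediately.
const box: TextBox = { value: "" };
const pending = suggestReply(box, '{"title":"Wrong invoice date"}', () =>
  Promise.resolve("Thanks for the report!")
);
```

In the bookmarklet itself, `send` would be a `fetch` POST to the local server, and `box` would be the textarea found on the ticket page.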

With that, I have fully functional GPT-4-powered responses and can start making my way through customer support tickets. But what happens when GPT-4 gets through 100 or 1000 tickets? Who does the actual admin on them? Who raises tasks for the development team, and who keeps track of what needs to be done for each ticket?

GitHub Issues, powered by GPT-4

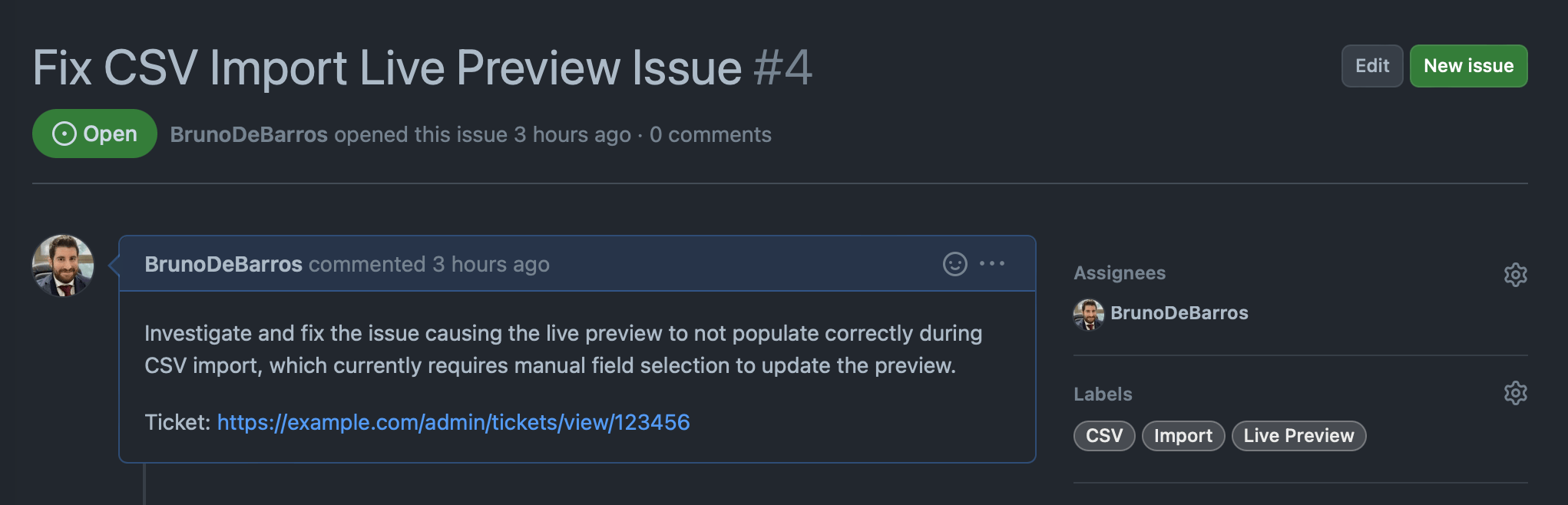

The answer is a second prompt. This second prompt receives the same ticket data, but instead of generating a response for the customer, it generates a GitHub issue.

This is the key.

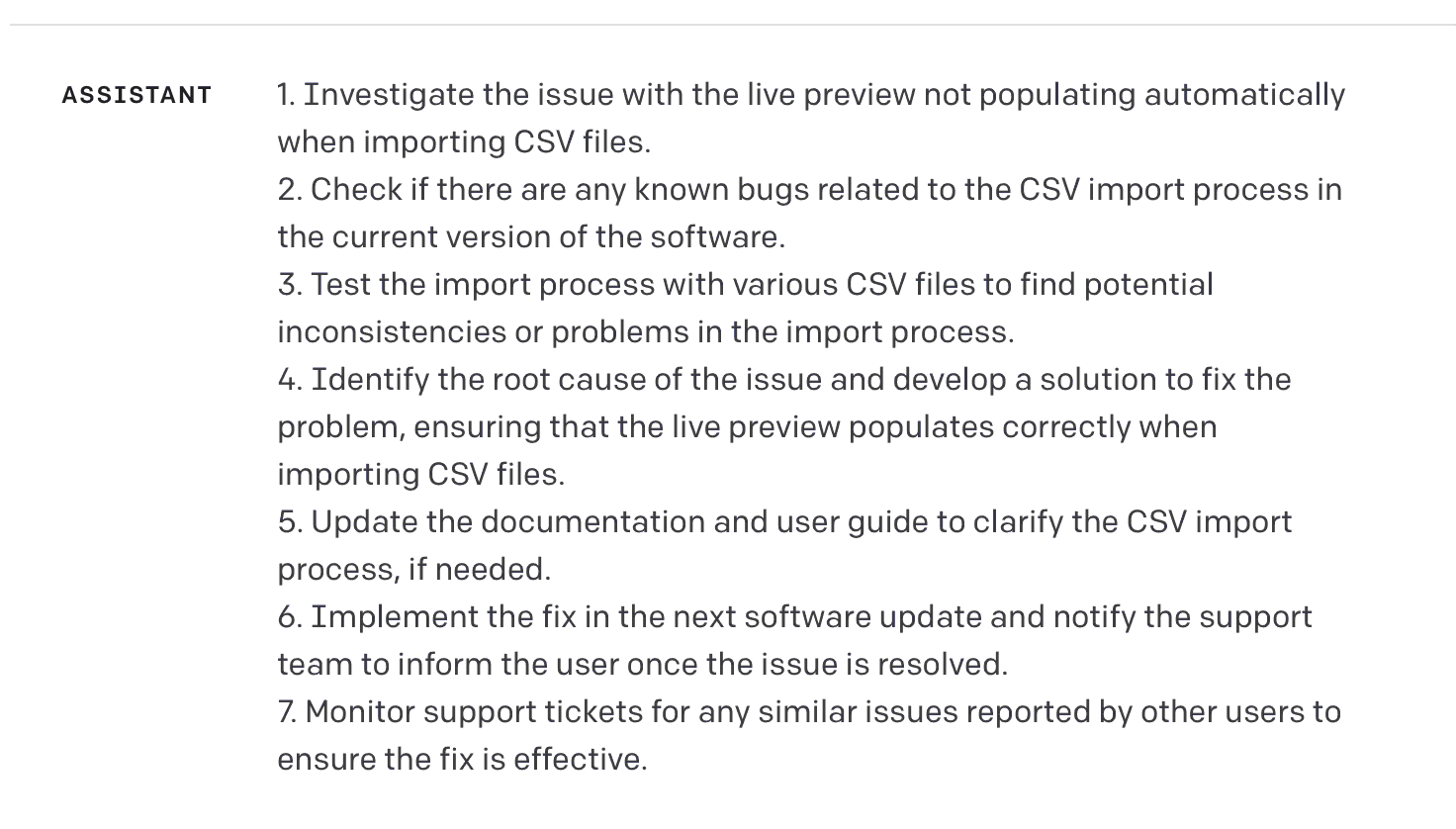

I start by asking it to come up with a list of action items for the development team. It very clearly succeeds at it:

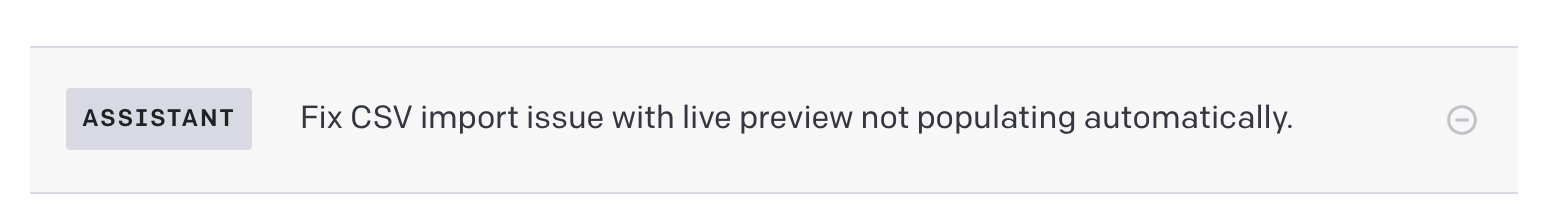

But it's too detailed and far too involved. We don't need anywhere near that level of detail; most of this stuff goes without saying. We just need a quick summary of the issue. So let's change the prompt to give a single-sentence title of what the developer needs to do.

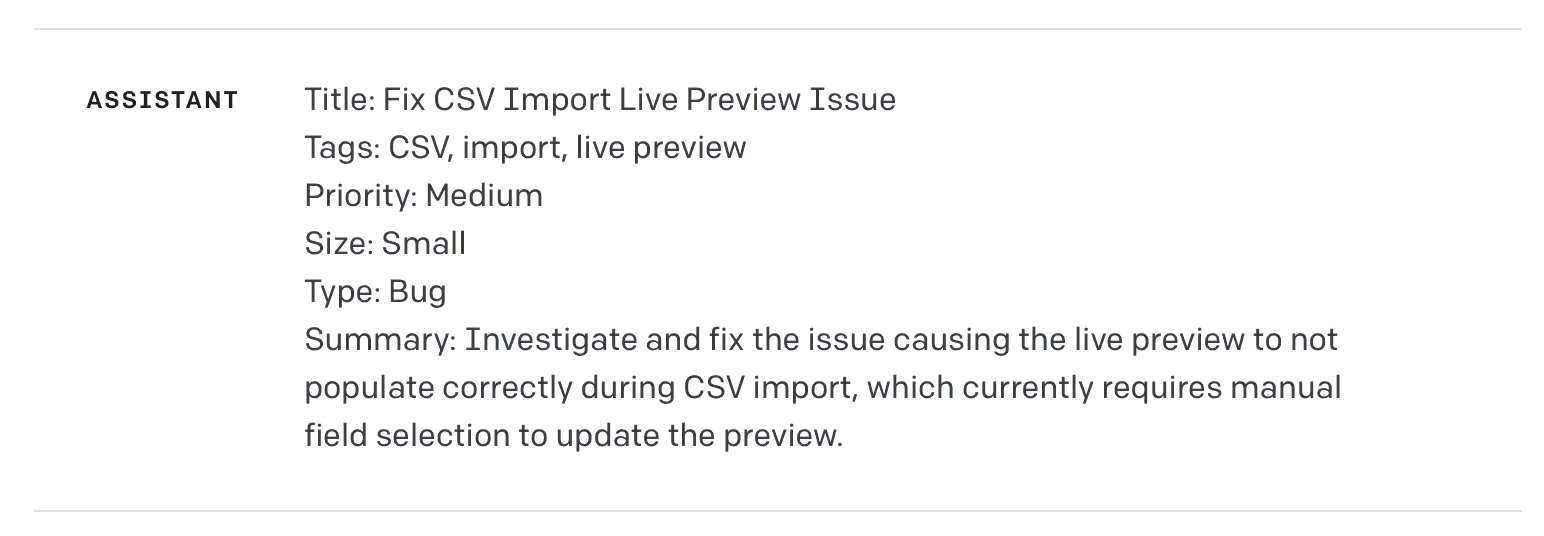

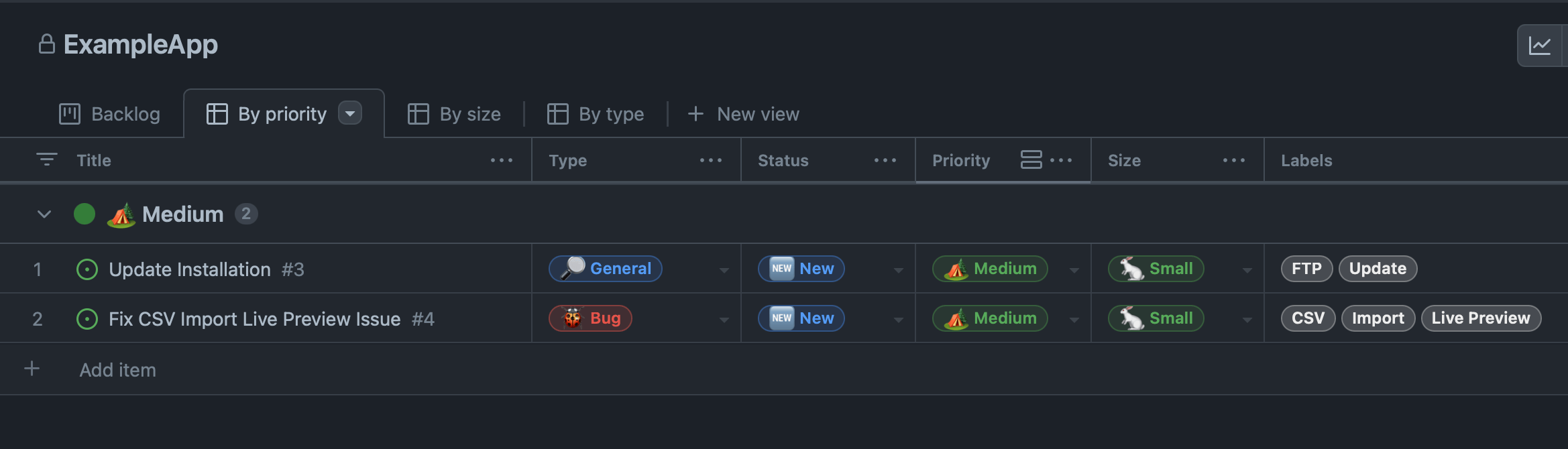

That's a lot better. And from that, we can now raise GitHub issues. We also need a few extra things to integrate with GitHub's project management tools (type of issue, priority, estimate, and some tags for good measure). Let's do that:

This is perfect. We can extract all the generated information with a regex and use the GitHub API to raise a new issue with all the necessary detail. If the response doesn't match the regex, we can re-generate it until it does. Thankfully the GitHub API is relatively easy to work with, so we can quickly get this done.
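A minimal sketch of the extract-and-retry step follows. It assumes the model is asked to answer in a fixed five-line format (`Title`, `Type`, `Priority`, `Estimate`, `Tags`); that format, the `IssueDraft` type, and both function names are made up for this example. The attempt cap is also an assumption, added so a stubborn response cannot loop forever.

```typescript
type IssueDraft = { title: string; type: string; priority: string; estimate: string; tags: string[] };

// Hypothetical response format: exactly five "Key: value" lines, in this order.
const ISSUE_PATTERN =
  /^Title: (.+)\r?\nType: (.+)\r?\nPriority: (.+)\r?\nEstimate: (.+)\r?\nTags: (.+)$/;

// Returns the parsed fields, or null when the response does not follow the format.
function parseIssue(text: string): IssueDraft | null {
  const match = ISSUE_PATTERN.exec(text.trim());
  if (match === null) return null;
  return {
    title: match[1].trim(),
    type: match[2].trim(),
    priority: match[3].trim(),
    estimate: match[4].trim(),
    tags: match[5].split(",").map((t) => t.trim()).filter((t) => t.length > 0),
  };
}

// Re-generates until the response parses, giving up after maxAttempts tries.
async function generateIssue(
  generate: () => Promise<string>,
  maxAttempts: number = 3
): Promise<IssueDraft> {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const draft = parseIssue(await generate());
    if (draft !== null) return draft;
  }
  throw new Error("No parsable issue after " + maxAttempts + " attempts");
}

const parsed = parseIssue(
  "Title: Fix date offset on new invoices\nType: bug\nPriority: high\nEstimate: 2h\nTags: invoices, dates"
);
const rejected = parseIssue("Sure! Here is the GitHub issue you asked for.");
```

Anchoring the pattern to the whole response means any chatty preamble from the model counts as a mismatch and triggers another attempt.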

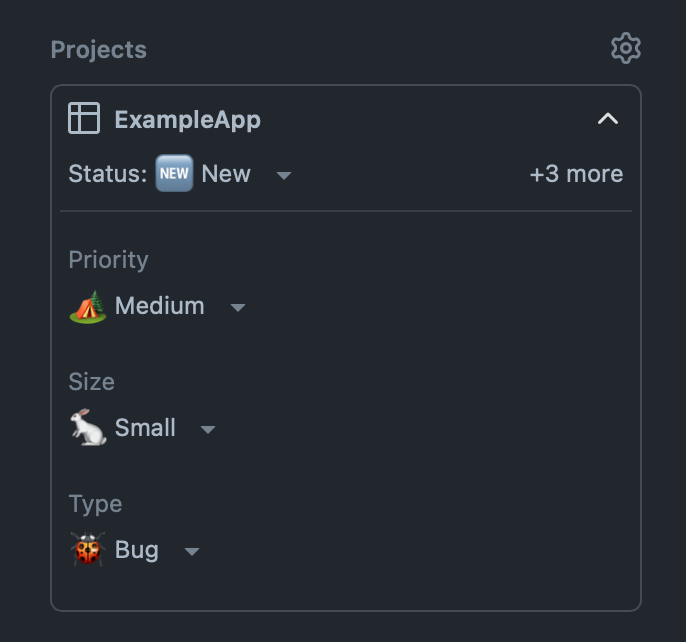

We can even use GitHub's Projects to add additional metadata and simplify task management.
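Raising the issue itself comes down to one call to GitHub's REST API: a `POST` to `/repos/{owner}/{repo}/issues` with a JSON body containing `title`, `body`, and `labels`. Here is a sketch of building that body, assuming the generated fields have already been parsed into an object. The `IssueDraft` shape, the `priority:` label prefix, and the body layout are illustrative choices, and Projects metadata is not covered.

```typescript
// Assumed shape of the fields extracted from the generated response.
type IssueDraft = { title: string; type: string; priority: string; estimate: string; tags: string[] };

// Subset of the fields accepted by GitHub's "create an issue" endpoint.
type GitHubIssuePayload = { title: string; body: string; labels: string[] };

function toGitHubIssue(draft: IssueDraft, ticketUrl: string): GitHubIssuePayload {
  return {
    title: draft.title,
    // Link back to the support ticket so the developer can read the full context.
    body: ["Support ticket: " + ticketUrl, "Estimate: " + draft.estimate].join("\n"),
    // Hypothetical labelling scheme: issue type, prefixed priority, then the tags.
    labels: [draft.type, "priority:" + draft.priority, ...draft.tags],
  };
}

// Sample values, invented for the example.
const payload = toGitHubIssue(
  { title: "Fix date offset on new invoices", type: "bug", priority: "high", estimate: "2h", tags: ["invoices", "dates"] },
  "https://support.example.com/tickets/1"
);
```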

What if there is no way for GPT to know the right answer?

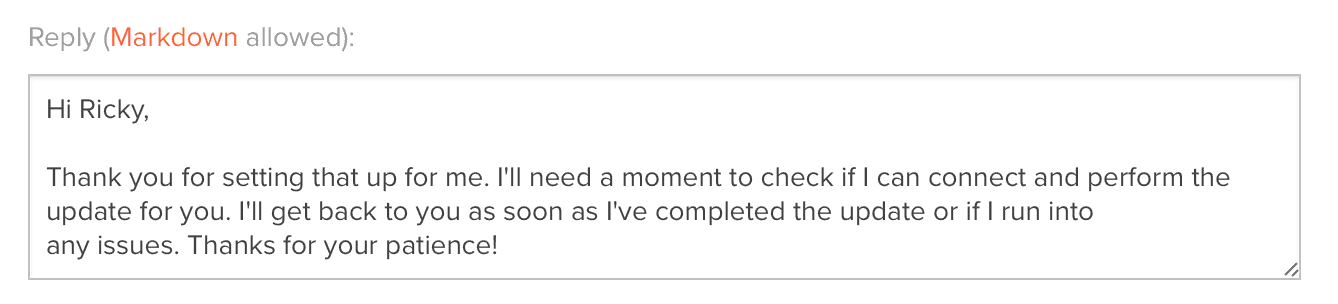

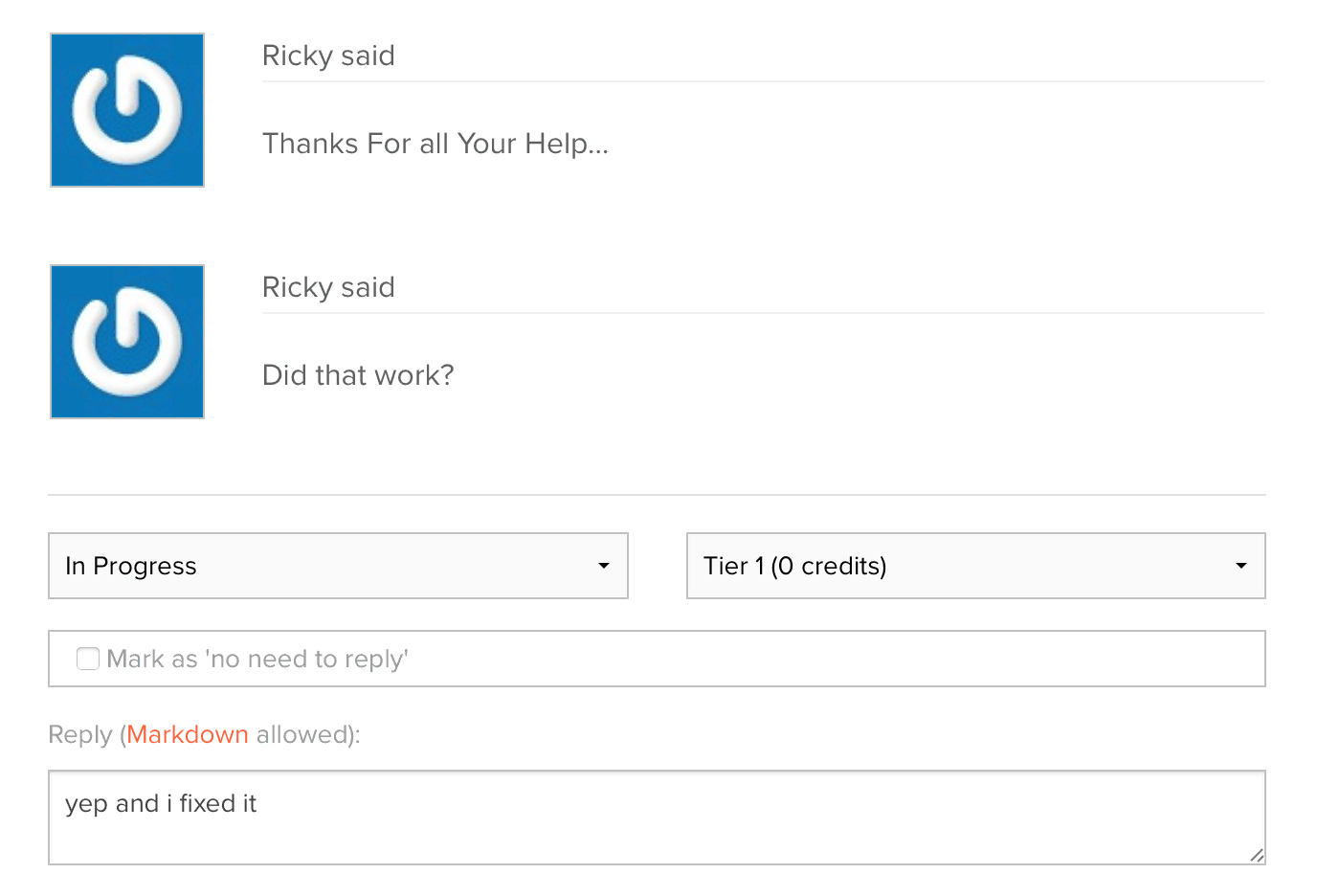

While going through some of the existing open tickets, I realised that sometimes, the AI just doesn't have a way of knowing what the answer is. What if a customer gives you access to their systems so you can help them out? If they ask, "are you able to connect?" how could the AI know?

It's good, but it's just stalling. And worse, it's stalling in a time-sensitive way ("I'll need a moment"), meaning that a developer needs to take action immediately. Not ideal. Sometimes it will also hallucinate a response ("yes, it worked!"), which is not something I can send without verifying.

To fix it, we can repurpose the response textarea. I can make it so that anything I write in it gets sent to GPT, and instead of asking it to generate a response from scratch, I ask it to merely edit whatever I wrote.

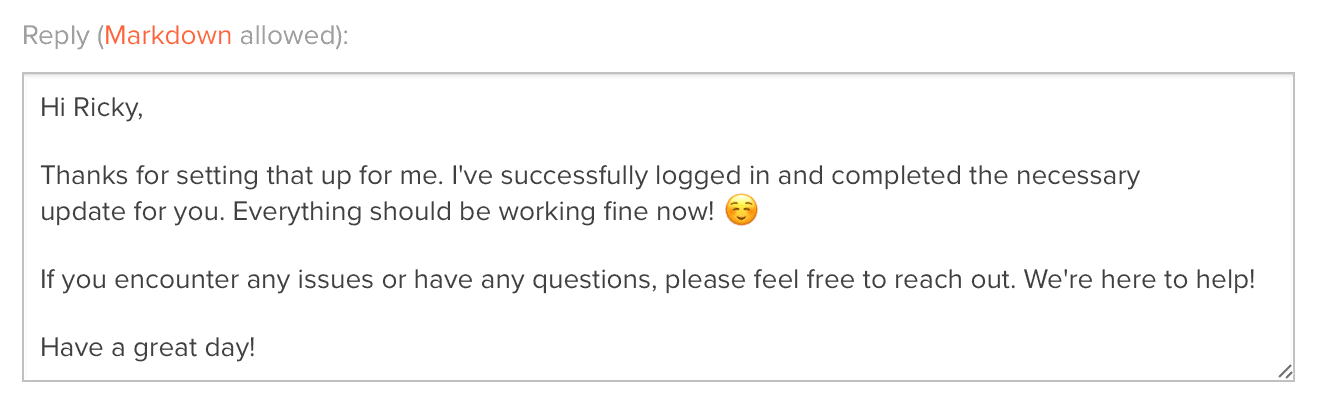

As you can see in the screenshot, my response couldn't be terser. But it does the job. It tells GPT-4 that the information was correct and the ticket was resolved. So now, not only can it come up with its own responses, but it can also be guided by me with just a few words.
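The switch between "write from scratch" and "edit what I wrote" can be sketched as a single extra instruction appended to the conversation. The wording and the `replyInstruction` helper below are hypothetical, and using a `system` message for the instruction is just one option.

```typescript
type ChatMessage = { role: "system" | "user" | "assistant"; content: string };

// Chooses the final instruction based on whether the textarea holds a draft.
function replyInstruction(draft: string): ChatMessage {
  const notes = draft.trim();
  if (notes.length === 0) {
    // Empty textarea: generate a reply from scratch.
    return { role: "system", content: "Write the next reply to the customer." };
  }
  // Non-empty textarea: expand the terse notes without inventing new facts.
  return {
    role: "system",
    content:
      "Rewrite the draft below as a complete reply to the customer. " +
      "Treat what it says as fact, and do not add claims that are not in the draft or the ticket.\n\n" +
      "Draft: " + notes,
  };
}

const fromScratch = replyInstruction("   ");
const guided = replyInstruction("yes connected fine, fixed, all good now");
```

Telling the model to treat the draft as fact is what addresses the hallucination problem: the "yes, it worked!" now comes from a human who actually checked.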

Final Result

I am a convert. I now understand the hype, and I cannot believe how easy it was to integrate something so powerful into an existing system and how useful it actually is. It's not replacing the humans involved in the process but 10x'ing them.

When I open a ticket, GPT-4 now generates a thorough response for the customer and stashes the ticket's details in GitHub. Customers get timely updates on their issues, and tickets are summarised and categorised automatically, allowing developers to do what they do best: Solve problems.

What's next?

I've been running this through some of our existing tickets, and the most critical issue that has come up is, of course, GPT's lack of knowledge of how we do things. In the screenshot above, a customer needed help updating their self-hosted installation. The original prompt led GPT to suggest things that the customer could try. In reality, if someone explicitly asks us to step in and help, we are happy to! I added that extra context to the original prompt, and now responses are exactly what we would want them to be.

As we move forward, other frequent issues that can be added to the system prompt will pop up, making the generated responses much more helpful. In a way, it is similar to teaching a new employee all the policy details of the business.

And there are also other things that can be done to improve this system.

One is detecting whether the ticket is resolved, so we close a ticket with a resolution message after enough time has passed ("It's been 2 weeks, we haven't heard anything more, and the customer seems happy with the resolution"), or follow up if we're still waiting to hear back from the customer and the issue is not resolved.
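As a rough sketch of how that rule might look, assume a separate prompt has already classified the ticket as resolved or not. The `TicketState` shape, the `nextAction` name, and the fixed two-week threshold are all hypothetical; nothing like this exists in the current integration.

```typescript
// Hypothetical state; `resolved` would come from a GPT classification of the ticket.
type TicketState = { resolved: boolean; waitingOnCustomer: boolean; lastMessageAt: Date };
type NextAction = "close" | "follow_up" | "none";

const QUIET_PERIOD_MS = 14 * 24 * 60 * 60 * 1000; // two weeks

function nextAction(state: TicketState, now: Date): NextAction {
  const quiet = now.getTime() - state.lastMessageAt.getTime() >= QUIET_PERIOD_MS;
  if (!quiet) return "none"; // recent activity: leave the ticket alone
  if (state.resolved) return "close"; // resolved and quiet: close with a resolution message
  return state.waitingOnCustomer ? "follow_up" : "none"; // unresolved and quiet: nudge the customer
}

const lastMessage = new Date("2023-03-01T00:00:00Z");
const twoWeeksLater = new Date("2023-03-15T00:00:00Z");
const closeIt = nextAction({ resolved: true, waitingOnCustomer: false, lastMessageAt: lastMessage }, twoWeeksLater);
const nudge = nextAction({ resolved: false, waitingOnCustomer: true, lastMessageAt: lastMessage }, twoWeeksLater);
const tooSoon = nextAction({ resolved: true, waitingOnCustomer: false, lastMessageAt: lastMessage }, new Date("2023-03-05T00:00:00Z"));
```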

The other is tracking how the customer is feeling throughout the entire exchange. Imagine automatically tagging tickets by sentiment and flagging negative ones for deeper human review.

I might actually build a new customer support product based on this. There is just so much to explore!