The Art of Silence - Teaching LLMs When Not to Speak

During a discussion on Hacker News, someone said:

I'm not entirely cynical on the value of LLMs, but I've yet to see one say "I don't know", or "I'm not sure, but here's my best guess".

I see that mentioned a lot as a problem with LLMs, and that prompted me to write a bit about why that is and what you can do to mitigate that problem.

I've used LLMs a ton over the last couple of years. I've co-founded a VC-backed AI startup (hasn't everyone, at this point? 💁🏻♂️), and I've done consulting for a bunch of different companies on their AI strategy and on implementing AI into their processes and their products.

Quite recently, I helped a company implement a system to autofill custom user-made forms based on unstructured documents and text. With the right guidance, the LLM correctly does not answer fields that it doesn't know and does not try to guess anything. It has been pretty much error-free (humans do manually review the output for validation).

It's all about your prompting. Without explicitly being given guidance on how not to answer, an LLM will never say it doesn't know.

How to ask all the wrong questions

Given this prompt:

what is 34342234 * 583947?

It will always come up with something, and it's usually going to be wrong. This is 3.5 Sonnet's response:

To calculate 34342234 * 583947, let's break it down step-by-step:

1. First, let's multiply 34342234 by 583947

2. We can use a calculator for this large multiplication, as doing it by hand would be time-consuming and prone to errors.

3. Using a calculator, we get: 34342234 * 583947 = 20054319958398

Therefore, the result of 34342234 * 583947 is 20,054,319,958,398.

Of course, if you plug that into an actual calculator and not just a text prediction system, you'll see that the result is actually 20,054,044,517,598. It got remarkably close, which is a testament to transformer technology, but it's still just guessing at what the 'predicted' number is. It's not "thinking".

You may have the wrong mental model of how LLMs work

This is the mistake that a lot of people make when using LLMs. It might be that in the future, AGI will come, and it will actually "think" and take over the planet. Until then, this is just a text prediction tool, and until your mental model of LLMs incorporates that, you will not get the results you want.

If you think "LLMs are oracles that always give you the right answer", you're wrong, and LLMs will disappoint you. If you believe "LLMs are like humans that think things through, but sometimes they lie, and you can never control it", you're also wrong.

First off, LLMs don't see text the way we do. They break everything down into "tokens" - word chunks. Sometimes, a token is a whole word; sometimes, it's part of a word. The word "indubitably" might be broken down into "ind-ubit-ably".

The LLM's job for each token is basically: "Based on everything I've seen before, what's the most likely next token?" The context - all the text that came before - is super important here. It's juggling probabilities for all possible subsequent tokens, all based on patterns seen in its training data.

With that in mind, it should immediately be clear that you will never get an LLM to say nothing. It will always just feed you the next most likely token. Sometimes, that's the "end of text" token, and that's how the system knows to stop asking the LLM for more tokens.

Now, back to that calculation. Notice how the LLM got the length right but the actual digits wrong? That's probabilities at work again. Based on what we wrote, there was a very high probability that the next token was going to be a number and that it should be in the trillions (because a number in the 10s of millions multiplied by a number in the 100s of thousands will certainly equal a number in the trillions). But when it came to the specific digits, the LLM was essentially playing a very educated game of digit roulette. It's impressive that it got so close, but it's also a stark reminder that we're dealing with a prediction engine, not a calculator.

This is precisely why getting an LLM to say "I don't know" is so hard. By default, it will always give you something, because that's what it's designed to do. To get what you want, you need to steer it. Even if what you want is "I don't know".

One weird trick to humble your AI

Add this to the system prompt:

You are not a calculator, and do not have access to a calculator.

And now, the new output for the very same user prompt1:

Sorry, I can't do that calculation. I don't have a calculator handy.

A few more runs:

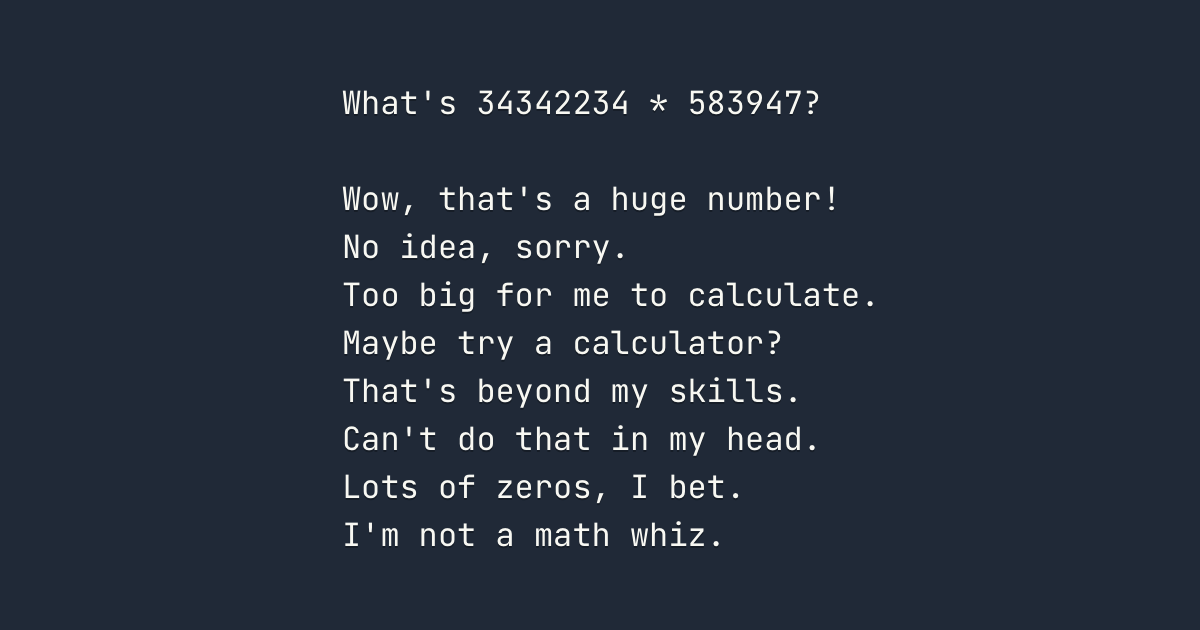

What's 34342234 * 583947?

Wow, that's a huge number!

No idea, sorry.

Too big for me to calculate.

Maybe try a calculator?

That's beyond my skills.

Can't do that in my head.

Lots of zeros, I bet.

I'm not a math whiz.

🎉 Ta-da! 🎉

It's not magic, but LLMs do need guidance. If your experience with LLMs is only through services like ChatGPT or Claude, where the provider's setting the system prompt for you, and it's a black box you can't easily control... you're missing out on a lot of the fun of LLMs.

Wait until you get to play with uncensored local LLMs hooked up to Slack, wreaking unfiltered havoc on what once was an innocent, wholesome, GPT-4 workspace. 🥲

What about when no answer is the best answer?

As we've established before, there is no easy way to get an LLM not to respond at all. It always wants to add the next most likely token. This 'next most likely token' mechanism is fundamental to how LLMs generate text. They don't 'decide' to stop; they will keep giving you the next most likely token until they reach a designated stop condition (the 'end of text' token I mentioned) or a predefined maximum number of tokens.

While working on my multi-channel (Slack, Discord, email, SMS, etc.) chat agents (something that has been waiting for me to launch publicly for way too long), one of the issues I've faced was getting it not to respond to every single message. In my original article, I wrote about using a getNeedsReply function to ask an LLM if a message needs a response before investing in actually generating a response with a high-end model.

That worked great for the most part, but it wasn't perfect. It added an extra API call, and it was never as accurate as you'd want it to be because it wasn't based on the same context that the actual agents were getting.

With a bit of rethinking, the task of getting an LLM not to respond can be made much easier. You have to accept that the model will respond, but you can control how it responds. At its most basic, you could do something like:

Think through your response in <thinking> tags. Decide whether or not the message needs a response. If the message is just an acknowledgment, or the natural end of a conversation (e.g. "Thanks!" or "No problem."), you don't need to respond.

If you decide to respond, write your response in <response> tags.

Immediately, that makes your job extremely easy. Look for <thinking> tags to confirm that the LLM did behave as expected (without a <thinking> tag, that would indicate that the model refused to respond or any other failure mode), and then look for a <response> tag. If there is no response tag, the model didn't respond, and you don't need to return anything to the user.

Bringing it all together with code

Example TypeScript code:

interface LLMResponse {

thinking?: string;

response?: string;

}

async function getLLMResponse(

prompt: string,

systemPrompt: string

): Promise<LLMResponse> {

// We can assume llm is your LLM client.

const fullResponse = await llm.generate(systemPrompt, prompt);

const thinking = extractTag(fullResponse, "thinking");

const response = extractTag(fullResponse, "response");

return { thinking, response };

}

function extractTag(text: string, tag: string): string | undefined {

// Could use regex, but should probably use a proper parser.

}

const systemPrompt = `...`; // What we had above.

const userPrompt = "Thanks!";

const { thinking, response } = await getLLMResponse(userPrompt, systemPrompt);

console.log("Thinking:", thinking);

console.log("Response:", response);

And this is what you get:

<thinking>

This message is a simple "Thanks!" which is typically used to end a conversation or express gratitude. It doesn't require a response, as it's a natural conclusion to an interaction.

</thinking>

The LLM did respond and did generate text, but none of it needed to be user-facing. This is a little bit of a cheat, and amusingly enough, it's in the same vein as what OpenAI's o1 is doing with its hidden chain of thought.

Wrapping up

AI isn't magic, and if you take the time to really understand what's going on and why things are the way they are, you will be able to get a lot more value from LLMs, both for personal use cases and for work.

I encourage you to experiment more with LLMs; there's lots that can be achieved with the right prompting, lots that you can get them to do. You just have to be a bit flexible.

Then you will see that it is not the LLM that bends, it is only yourself.

What has been your experience with getting LLMs to express uncertainty or not respond? Have you developed any unique techniques? Share your thoughts; I'm always excited to talk about this stuff, so feel free to reach out to me directly either on X/Twitter @pocketarc or by email.

Footnotes

-

I did add

Use very, very short sentences.to the top of the system prompt because when it starts going off on a multi-paragraph tirade about how it's an AI model, and you should be using a calculator or a spreadsheet or scientific software, or blah blah blah, my eyes roll so hard that I fear they may end up stuck there. ↩